Improving search starts with a closer look at queries

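In the world of search-based platforms, delivering the right information to users at the right time has always mattered. With the rise of technologies such as machine learning (ML) and artificial intelligence (AI), serving users highly relevant content has become a higher priority for companies than ever before, and an effective way to stand out from competitors. But how can product leaders accurately measure, and ultimately improve, the effectiveness of ML/AI in use cases like these?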

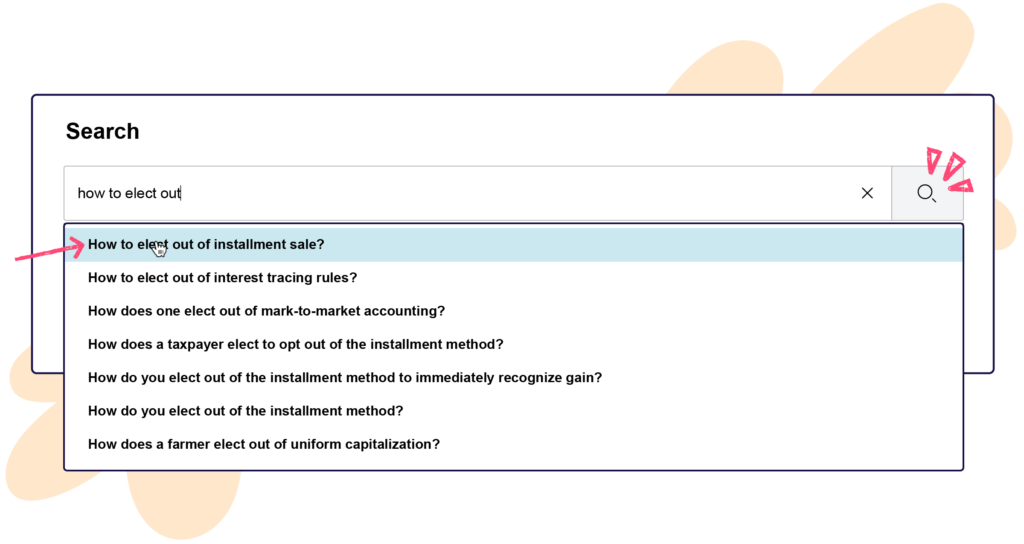

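Thomson Reuters’ entire platform is built on the power of search. Its Checkpoint Edge product lets tax and accounting professionals conduct research and find the information they need quickly and efficiently. “As a search platform, we have a search box where users type in terms,” explained Vinay Shukla, a former product owner at Thomson Reuters. “We also have a feature called the ‘auto-suggest box’ that drops down as users start typing [search] terms. We built an AI capability that starts proposing recommended searches based on what the user types.”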

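Shukla and his team needed a way to measure how well this auto-suggest feature was performing. They also wanted to focus on the behavioral and psychological side of how users engage with the product’s search functionality. “We wanted to understand the moments when users pause or stop typing, and when they modify the queries we suggested,” he explained. “That kind of information is extremely valuable for sustaining AI/ML features in the future, because they require large amounts of explicit and implicit data.”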

Querying the queries

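Shukla and his team focused on three distinct, important data points in the user’s search process and captured them with Pendo track events: (1) what the user had typed at the moment they stopped entering text, (2) whether they selected anything from the auto-suggest dropdown, and (3) the user’s final search. “With these three pieces of information, a vast world is opening up for analyzing whether users are changing their queries and what they’re typing into them,” Shukla said.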
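To make the three data points concrete, here is a minimal Python sketch of what one captured search might look like once exported, together with a simple rule for labeling its outcome. The field names (`initial_input`, `selected_suggestion`, `final_query`), the outcome labels, and the sample queries are hypothetical illustrations; they are not Pendo’s export schema or Thomson Reuters’ actual logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SearchRecord:
    """One search, assembled from three hypothetical track-event fields."""
    initial_input: str                  # (1) text typed when the user stopped typing
    selected_suggestion: Optional[str]  # (2) dropdown item chosen, or None if none
    final_query: str                    # (3) query the user actually submitted


def classify(record: SearchRecord) -> str:
    """Label a search outcome (illustrative rules, not production logic)."""
    if record.selected_suggestion is not None:
        if record.final_query == record.selected_suggestion:
            return "accepted_suggestion"  # picked a suggestion and ran it unchanged
        return "edited_suggestion"        # picked a suggestion, then changed it
    if record.final_query.strip() == record.initial_input.strip():
        return "typed_as_is"              # query was already searchable as typed
    return "modified_query"               # ignored the dropdown and kept refining


if __name__ == "__main__":
    examples = [
        SearchRecord("depreciation sec", "depreciation section 179", "depreciation section 179"),
        SearchRecord("depreciation sec", "depreciation section 179", "depreciation section 179 limits"),
        SearchRecord("form 1065", None, "form 1065"),
        SearchRecord("like kind", None, "like kind exchange real property"),
    ]
    for r in examples:
        print(classify(r))
```

Keeping the three fields together in one record is what makes the later comparisons possible: each label depends on at least two of the three data points, so none of them could be derived from a single event on its own.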

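He says this three-part tracking approach lets the team dig deeper into user behavior and ultimately improve the performance of its ML/AI algorithms. “Whether users stopped typing or realized their question was already in a searchable state, track events let us not only see how well the auto-suggest feature is working, but also understand how much information is needed before AI suggestions can be displayed,” Shukla said.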

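Shukla says tracking events in Pendo is a big step up from the team’s previous approach, which could not capture the moment a user’s search was actually executed. “Using Pendo is great. Even non-technical people can pull data from the Pendo dashboard and build pivots. People who aren’t data scientists can now do analysis really quickly,” he said.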

The ability to track these events and understand user engagement has also been hugely beneficial for Thomson Reuters’ editorial team. “We have editors that write content for our platform in-house,” Shukla explained. “They’ve been greatly empowered to analyze and take a look at what types of queries users are putting in. If they actually helped to curate some of those queries, they’re now able to see feedback on what alternatives users are searching for as well. So there’s been a lot of empowerment through this particular track event.”

Shukla and his team now leverage these tracked events to measure success in—and improve the functionality of—the Checkpoint Edge product. “If a user selects content from the dropdown, then that feature is a success,” he explained. “It has created a lot of opportunities for us to analyze the types of queries our users are actually inputting versus what they might have seen in the dropdown. It’s a chance for us to figure out if we need to enhance the feature or take things back to the drawing board if queries are serving up irrelevant information.”
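Shukla’s success criterion (a selection from the dropdown counts as a win) translates naturally into an acceptance rate. The sketch below assumes the same hypothetical three-field records as before. It computes that rate and the average length of what users had typed before selecting a suggestion, one rough proxy for how much input the AI needs before its suggestions become useful. It also collects the searches where the dropdown was ignored and the submitted query differs from the typed text, the “actually inputting versus what they might have seen” cases worth reviewing. All names and sample queries are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SearchRecord:
    initial_input: str                  # text typed when the user stopped typing
    selected_suggestion: Optional[str]  # dropdown item chosen, or None if none
    final_query: str                    # query the user actually submitted


def acceptance_rate(records: List[SearchRecord]) -> float:
    """Share of searches where the user picked something from the dropdown."""
    if not records:
        return 0.0
    selected = sum(1 for r in records if r.selected_suggestion is not None)
    return selected / len(records)


def mean_prefix_length(records: List[SearchRecord]) -> Optional[float]:
    """Average number of characters typed before a suggestion was selected."""
    lengths = [len(r.initial_input) for r in records if r.selected_suggestion is not None]
    return sum(lengths) / len(lengths) if lengths else None


def review_queue(records: List[SearchRecord]) -> List[Tuple[str, str]]:
    """(typed, submitted) pairs where the dropdown was ignored and the query changed."""
    return [
        (r.initial_input, r.final_query)
        for r in records
        if r.selected_suggestion is None
        and r.final_query.strip() != r.initial_input.strip()
    ]


if __name__ == "__main__":
    records = [
        SearchRecord("sec 179", "section 179 deduction", "section 179 deduction"),
        SearchRecord("form 10", "form 1040", "form 1040"),
        SearchRecord("nexus", None, "economic nexus thresholds"),
        SearchRecord("form 1065", None, "form 1065"),
    ]
    print(acceptance_rate(records))     # 2 of 4 searches used the dropdown
    print(mean_prefix_length(records))  # both accepted prefixes are 7 characters
    print(review_queue(records))
```

A falling acceptance rate or a growing review queue would be the kind of signal that prompts the “enhance the feature or go back to the drawing board” decision Shukla describes.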

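By linking user feedback with analytics, Shukla’s team also added deeper context to how it measures the auto-suggest feature’s success. The team creates a segment of users who completed a specific track event, generates a Net Promoter Score (NPS) report for that segment, and compares it with a segment of users who have not engaged with the tracked event. This gives a clearer picture of the feature’s impact on user sentiment. “Being able to use this to see trends in how users respond, from an NPS perspective, has been really important for us,” Shukla explained.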
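For reference, NPS on the standard 0 to 10 scale is the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 to 6). The segment comparison described above can be sketched in Python as follows, assuming you already have each user’s NPS response and the set of users who completed the track event. Both inputs and the function names are hypothetical; this is not how Pendo generates its NPS reports internally.

```python
from typing import Dict, Iterable, Optional, Set, Tuple


def nps(scores: Iterable[int]) -> Optional[float]:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    values = list(scores)
    if not values:
        return None
    if any(not 0 <= s <= 10 for s in values):
        raise ValueError("NPS responses must be on a 0-10 scale")
    promoters = sum(1 for s in values if s >= 9)
    detractors = sum(1 for s in values if s <= 6)
    return 100.0 * (promoters - detractors) / len(values)


def compare_segments(
    responses: Dict[str, int], engaged_users: Set[str]
) -> Tuple[Optional[float], Optional[float]]:
    """Return (NPS of users who completed the event, NPS of everyone else)."""
    engaged = [score for user, score in responses.items() if user in engaged_users]
    others = [score for user, score in responses.items() if user not in engaged_users]
    return nps(engaged), nps(others)


if __name__ == "__main__":
    responses = {"u1": 10, "u2": 9, "u3": 7, "u4": 3, "u5": 6, "u6": 8}
    print(compare_segments(responses, {"u1", "u2", "u3", "u4"}))  # (25.0, -50.0)
```

With these made-up responses, the engaged segment has two promoters and one detractor out of four (NPS 25), while the rest have no promoters and one detractor out of two (NPS -50). Real segments would need far more responses before a gap like this says anything about the feature.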

Finally, Shukla noted that the ease of data extraction from Pendo has been a huge win. “The biggest benefit is being able to put that raw data in front of the people doing the work,” he said. “It doesn’t take a lot of effort or a huge learning curve to pull the data down and filter on queries that are hot in the market right now—especially when it comes to knowing what our users are searching for.”